GOTC Cloud Native Summit

May 28 09:00:00-16:10:00

Producer: Keith Chan

Cloud Native Summit is CNCF's flagship conference, bringing together users and technical experts from the world's leading open source and cloud native communities. Join us at Cloud Native Summit China at GOTC 2023 to discuss the future and technical direction of cloud native computing.

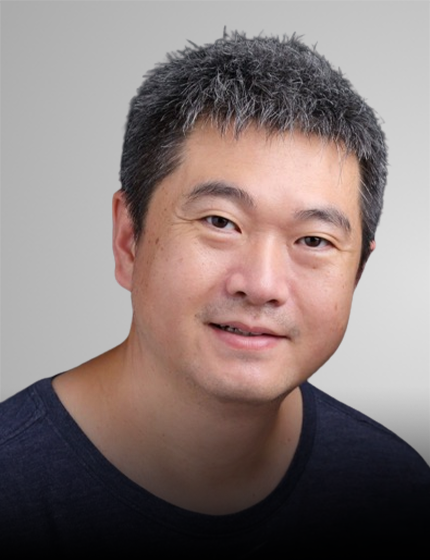

Keith Chan

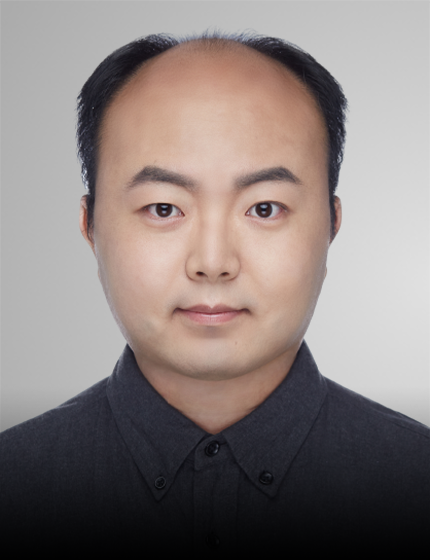

Keith Chan Bingshen Wang

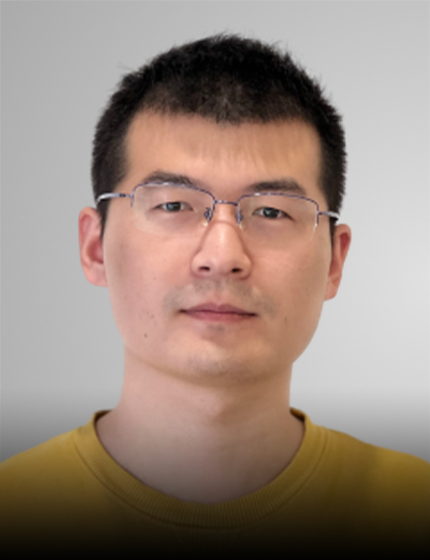

Bingshen Wang Wen Hu

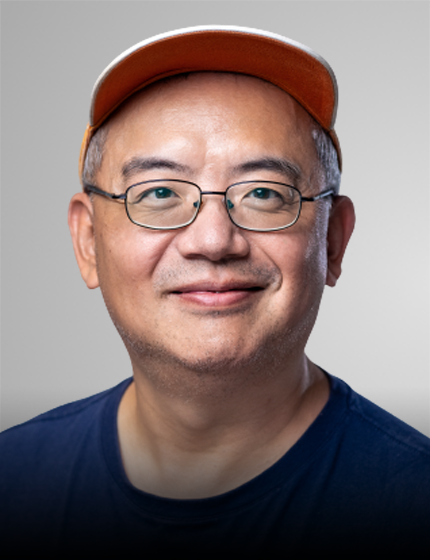

Wen Hu Michael Yuan

Michael Yuan Qiming Hu

Qiming Hu Xiaohui Zhang

Xiaohui Zhang Huailong Zhang

Huailong Zhang Shengli Liu

Shengli Liu Chenlin Liu

Chenlin Liu Liang Chang

Liang Chang Miao Zhang

Miao Zhang Jingxue Li

Jingxue Li Wei Cai

Wei Cai Rong Gu

Rong Gu /

/